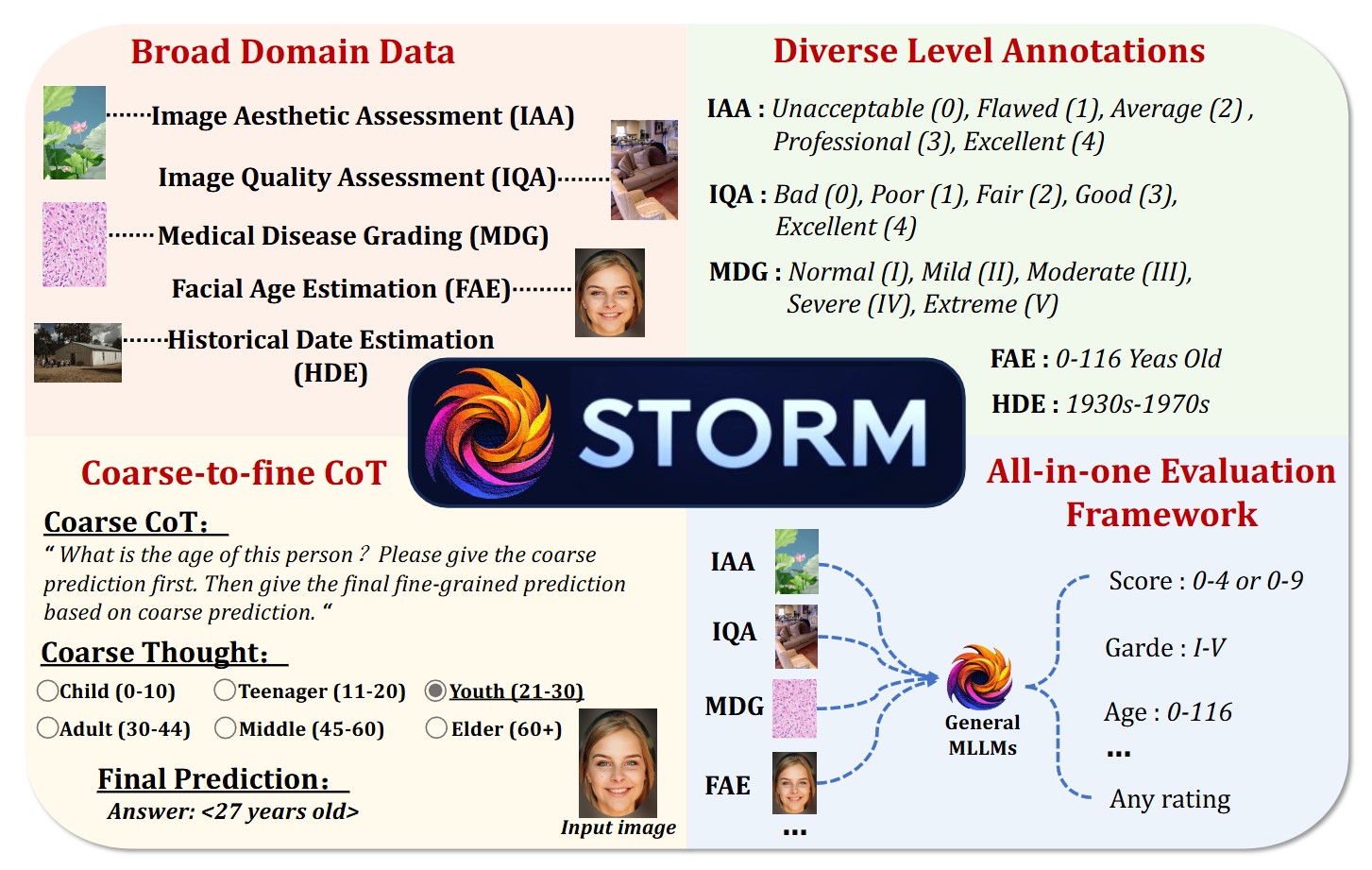

Visual rating is an essential capability of artificial intelligence (AI) for multidimensional quantification of visual content, primarily applied in ordinal regression (OR) tasks such as image quality assessment, facial age estimation, and medical image grading. However, current multi-modal large language models (MLLMs) under-perform in such visual rating ability while also suffering the lack of relevant datasets and benchmarks. In this work, we collect and present STORM, a data collection and benchmark for Stimulating Trustworthy Ordinal Regression Ability of MLLMs for universal visual rating. STORM encompasses 14 ordinal regression datasets across five common visual rating domains, comprising 655K image-level pairs and the corresponding carefully curated VQAs. Importantly, we also propose a coarse-to-fine processing pipeline that dynamically considers label candidates and provides interpretable thoughts, providing MLLMs with a general and trustworthy ordinal thinking paradigm. This benchmark aims to evaluate the all-in-one and zero-shot performance of MLLMs in scenarios requiring understanding of the essential common ordinal relationships of rating labels. Extensive experiments demonstrate the effectiveness of our framework and shed light on better fine-tuning strategies. The STORM dataset, benchmark, and pre-trained models are available on the following webpage to support further research in this area.

@misc{wang2025stormbenchmarkingvisualrating,

title={STORM: Benchmarking Visual Rating of MLLMs with a Comprehensive Ordinal Regression Dataset},

author={Jinhong Wang and Shuo Tong and Jian liu and Dongqi Tang and Jintai Chen and Haochao Ying and Hongxia Xu and Danny Chen and Jian Wu},

year={2025},

eprint={2506.01738},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2506.01738},

}